Summary: Microsoft will lead in enterprise AI, leveraging ‘data gravity’, i.e. the vast corporate data already stored on its platforms. Join the discussion on LinkedIn.

Currently, the most successful approach to limiting large language model (LLM) hallucinations is RAG: Retrieval Augmented Generation. We basically ask the LLM to use only information we provide ourselves in the prompt. We tell it: “hey, here are a bunch of paragraphs and the answer should be somewhere in there, come back with a coherent answer”. This approach has several advantages, because it lets us:

– use private, up-to-date and reliable data that was never seen by the LLM

– know where the information is coming from (trust)

– use a dataset that is much larger than what fits in a single chat prompt. Even GPT-4 Turbo’s 128K-token context window is nowhere near what we need for an enterprise application. In addition, testing shows that response quality drops off significantly as you use more of the context (though anything below about 73K tokens looks fine, which is still impressive); cf. the link in the comments.
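As an illustration of the points above, a RAG prompt might be assembled roughly as follows. This is a minimal sketch under stated assumptions: the instruction wording and the `build_rag_prompt` name are hypothetical, and tokens are approximated by whitespace-separated words (real tokenizers count differently). Numbering the snippets lets the LLM cite where an answer came from, and the budget keeps the prompt well inside the context window.

```python
def build_rag_prompt(question: str, snippets: list[str], max_tokens: int = 1000) -> str:
    """Assemble a RAG prompt: instructions, numbered source snippets, then the question.

    Assumption: tokens are approximated by whitespace-separated words.
    Snippets are expected in ranked order; once the next one would exceed
    the budget, the remaining (lower-ranked) snippets are dropped.
    """
    header = (
        "Answer the question using only the numbered sources below. "
        "Cite the source numbers you used. If the answer is not in the "
        "sources, say that you don't know.\n\n"
    )
    used = len(header.split()) + len(question.split())
    parts = []
    for i, snippet in enumerate(snippets, start=1):
        cost = len(snippet.split())
        if used + cost > max_tokens:
            break  # stop before overflowing the (approximate) token budget
        parts.append(f"[{i}] {snippet}")
        used += cost
    return header + "\n".join(parts) + f"\n\nQuestion: {question}\nAnswer:"


prompt = build_rag_prompt(
    "How long is the refund period?",
    ["Refunds are accepted within 30 days of purchase.", "Shipping takes 3 to 5 business days."],
)
print(prompt)
```

Dropping whole snippets rather than truncating them keeps every source the LLM sees intact and citable.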

To select which information to put in the prompt, we first need to preprocess our dataset. The process involves:

– cutting all our documents into smaller pieces (‘chunks’), then

– running each piece through a neural network that turns it into a series of numbers representing its meaning (an ‘embedding’), and

– storing all those embeddings in a database
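The preprocessing steps above can be sketched as follows. To keep the sketch self-contained and runnable, `toy_embed` is a hashed bag-of-words stand-in for a real neural embedding model (it captures shared words, not meaning), and an in-memory list stands in for the vector database; the function names, chunk size and overlap are illustrative assumptions.

```python
import hashlib
import math


def chunk_text(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of `chunk_size` words, each overlapping the previous one by `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """STAND-IN for a neural embedding model: hashed bag of words, L2-normalised."""
    vec = [0.0] * dim
    for word in text.lower().split():
        index = int(hashlib.sha256(word.encode()).hexdigest(), 16) % dim
        vec[index] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


# An in-memory list stands in for the vector database.
vector_store: list[dict] = []


def index_document(doc_id: str, text: str) -> None:
    """Chunk a document, embed each chunk and store it together with its source."""
    for n, chunk in enumerate(chunk_text(text)):
        vector_store.append(
            {"doc_id": doc_id, "chunk_no": n, "text": chunk, "embedding": toy_embed(chunk)}
        )
```

The overlap means a sentence cut at a chunk boundary still appears whole in at least one chunk, at the cost of storing some text twice; keeping `doc_id` next to each chunk is what later lets us show where an answer came from.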

When we later want to ask our datastore a question, we:

– convert our *question* into an embedding

– look in the database for chunks with a similar meaning (cosine similarity), then

– rank and select diverse text snippets

– put the snippets in the prompt

– let the LLM find the answer in the provided text
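The retrieval steps could look roughly like the sketch below. It assumes the question and the chunks have already been embedded (the vectors are plain lists of floats), and for the “rank and select diverse snippets” step it uses Maximal Marginal Relevance (MMR), one common technique; the post doesn’t say which method it has in mind, and the function names and `lambda_` weight are our own choices.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def mmr_select(
    query_vec: list[float],
    chunk_vecs: list[list[float]],
    k: int = 3,
    lambda_: float = 0.5,
) -> list[int]:
    """Maximal Marginal Relevance: pick k chunk indices that are relevant to
    the query but not redundant with the chunks already picked."""
    relevance = [cosine_similarity(query_vec, v) for v in chunk_vecs]
    selected: list[int] = []
    candidates = list(range(len(chunk_vecs)))
    while candidates and len(selected) < k:
        def mmr_score(i: int) -> float:
            # Penalise similarity to the most similar already-selected chunk.
            redundancy = max(
                (cosine_similarity(chunk_vecs[i], chunk_vecs[j]) for j in selected),
                default=0.0,
            )
            return lambda_ * relevance[i] - (1 - lambda_) * redundancy

        best = max(candidates, key=mmr_score)
        selected.append(best)
        candidates.remove(best)
    return selected


# Chunks 0 and 1 are exact duplicates; chunk 2 covers a different aspect.
query = [1.0, 0.5, 0.0]
chunks = [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
print(mmr_select(query, chunks, k=2))  # → [0, 2]
```

A plain top-2 by cosine similarity would return the two duplicates; MMR swaps the second one for the less similar but non-redundant chunk. With `lambda_=1.0` it reduces to plain top-k ranking.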

This whole area is evolving quickly, with improvements being made at every step: smarter chunking, better embeddings, better searching (e.g. hybrid search that combines embeddings with keywords), better ranking, better prompts, etc.
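Hybrid search needs a way to merge the keyword ranking with the embedding ranking. One simple, widely used option is Reciprocal Rank Fusion (RRF), where each chunk scores the sum of 1/(k + rank) over every list it appears in; the post doesn’t name a fusion method, so this is our choice, and the chunk IDs below are hypothetical. The constant k = 60 is the value commonly used in practice.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of chunk IDs into a single ranking.

    Each chunk earns 1 / (k + rank) from every list it appears in, so chunks
    that rank well in both keyword and vector search float to the top.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] = scores.get(chunk_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda cid: scores[cid], reverse=True)


# Hypothetical results for the same query from two retrievers.
keyword_hits = ["c", "a", "b"]  # e.g. from a BM25 keyword index
vector_hits = ["a", "b", "d"]   # e.g. from cosine similarity on embeddings
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))  # → ['a', 'b', 'c', 'd']
```

Chunk "c", the top keyword hit, ends up third because vector search never found it: RRF rewards agreement between retrievers, and it only needs ranks, so the two very different score scales never have to be normalised against each other.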

Startups building on RAG were challenged by OpenAI’s custom GPTs, which made it super easy to create your own simple chatbot over private information. For enterprise use, however, OpenAI’s current functionality falls short because:

– GPTs can leak your private datasets to the world

– you cannot set access control on documents

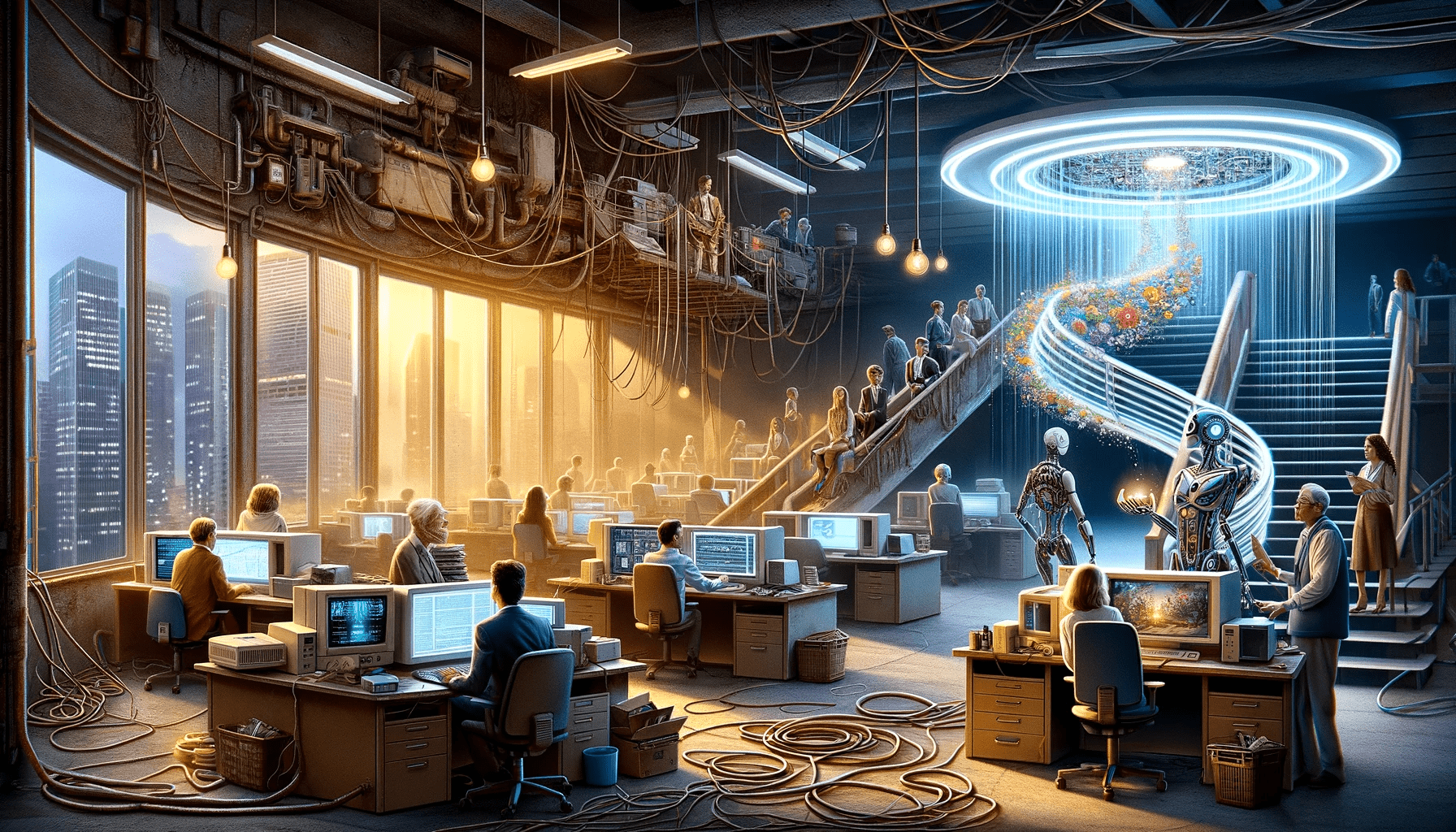

It’s getting even harder for startups now that Microsoft has launched Copilot Studio, a generative AI evolution of its chatbot platform. Notably, Microsoft has added access control, so a user only gets answers from documents they already have access to, for example on OneDrive. This shows how well Microsoft understands enterprise needs and how strategically it uses its extensive corporate reach. This dominance, reinforced by the loss of trust in OpenAI after the Sam Altman firing fiasco, puts Microsoft in pole position for leadership in enterprise AI applications.
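We don’t know exactly how Copilot Studio implements this internally, but the general idea (often called security trimming) can be sketched as below, assuming each chunk inherits the access-control list of its source document. The permission check happens at retrieval time, before any text reaches the prompt, so the LLM never sees documents the user couldn’t open. The `Chunk` type and the group names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # copied from the source document's access-control list


def visible_chunks(chunks: list[Chunk], user_groups: set[str]) -> list[Chunk]:
    """Keep only chunks whose source document the user is allowed to read."""
    return [c for c in chunks if c.allowed_groups & user_groups]


# Hypothetical example data.
chunks = [
    Chunk("hr-001", "Salary bands for engineering roles", frozenset({"hr"})),
    Chunk("wiki-042", "Holiday policy: 25 days per year", frozenset({"all-staff", "hr"})),
]
print([c.doc_id for c in visible_chunks(chunks, {"all-staff"})])  # → ['wiki-042']
```

Filtering before ranking (rather than asking the LLM to ignore restricted text) is the safer design: restricted content can’t leak through the answer if it was never in the prompt.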